For months now, discussions about what some call the greatest technological revolution in history—artificial intelligence (AI)—have been everywhere. Thanks to increasingly sophisticated computing, machines are now surpassing human beings in speed and knowledge capacity. In many cases, they can even replace humans, performing the same tasks more efficiently.

AI represents the most significant upheaval in history, surpassing any previous industrial revolution. It affects every aspect of society and has the potential to replace the vast majority of jobs. Is this a blessing or a curse? Some enthusiasts argue that AI will usher in an era of unprecedented prosperity, while others warn that it poses a great risk to humanity's future. Let's explore both perspectives.

Not long ago, AI was barely visible to the general public. The first major milestone occurred in 1997, when IBM's Deep Blue computer defeated world chess champion Garry Kasparov. While this machine appeared "intelligent," it did not possess human intelligence; rather, it relied on raw computing power, evaluating some 200 million chess positions per second. That allowed Deep Blue to examine far more lines of play than any human could, with incredible speed.

Then came voice assistants that could respond to spoken commands, automatic translation tools, and finally, in late 2022, ChatGPT, a program developed by Sam Altman's OpenAI. ChatGPT can compose and summarize texts, and students quickly adopted it for schoolwork. The texts generated are so polished that teachers often struggle to determine whether they were written by students or AI.

AI has access to billions of books and all available internet knowledge, processing and summarizing information in seconds. In theory, AI enthusiasts believe that such vast access to knowledge could lead to breakthroughs, such as finding cures for diseases like cancer. However, AI lacks morals or values beyond what its programmers provide.

Now, new AI-driven technologies emerge almost weekly. Deepfake technology, for instance, enables the creation of highly realistic but entirely fabricated videos. It can manipulate voices and faces, making people appear to say things they never did. This raises serious ethical concerns, as false video evidence can damage reputations, mislead entire populations, or even be used in legal cases.

Self-driving cars are becoming a reality, and robots are expected to take over both household chores and professional jobs, whether manual or intellectual. Take the legal profession as an example: much of a lawyer's job involves researching legal precedents. AI can perform this task in seconds, searching vast databases of recorded judgments.

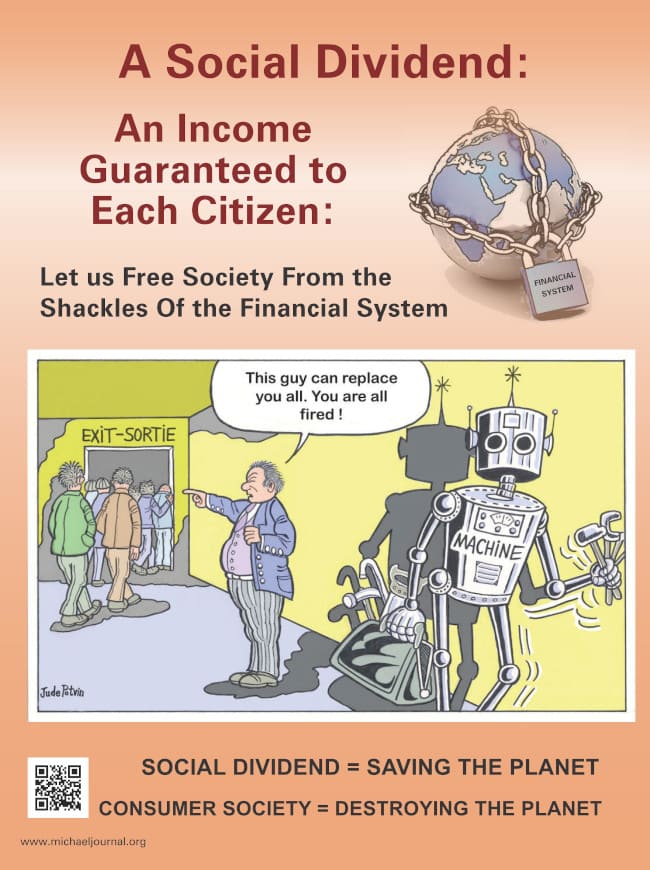

This transformation will radically alter the job market, not in a decade or two, but within a year or less. A 2023 report from investment bank Goldman Sachs estimated that the equivalent of up to 300 million full-time jobs worldwide could be exposed to automation by AI.

Economist Jeremy Rifkin predicted this shift in his 1995 book, The End of Work. He referenced a Swiss study that estimated "within 30 years, less than 2% of the workforce will be needed to produce all the goods the world requires." Rifkin argued that three out of four workers—from clerks to surgeons—would eventually be replaced by AI-driven machines. Now, in 2025, what once seemed exaggerated is becoming reality.

Without policy changes regarding income distribution, society faces potential chaos. Taxing 2% of workers to support 98% of the unemployed is unsustainable. A universal social dividend, as proposed by economist Clifford Hugh Douglas through the concept of Economic Democracy, could provide a solution.

Beyond job displacement, AI also threatens the very integrity of the human person. Elon Musk, the world's richest man, leads multiple tech industries, including Tesla (electric cars) and SpaceX (space exploration). He recently launched Optimus robots, which are designed to assist with manual labor and could soon replace human workers entirely. Musk aims to mass-produce millions of these robots by next year at a cost of about $20,000 each—offering tireless, strike-proof labor.

Musk's company Neuralink is also developing brain-machine interfaces, implanting chips in human brains to connect them with AI. The first human trials have already taken place. While the goal is to assist individuals with paralysis or neurological disorders, it raises a profound question: Are we becoming half-human, half-machine?

Musk and other proponents argue that such enhancements will make humans as capable as AI. This concept, known as transhumanism, envisions a fusion of biology and technology. Klaus Schwab, founder of the World Economic Forum, calls this the "fourth industrial revolution"—the merging of physical, digital, and biological realms.

Some, like WEF speaker Yuval Noah Harari, even claim AI could enable immortality by transferring human consciousness into machines. However, Harari, an atheist, views humans purely as material beings, ignoring aspects of consciousness, spirituality, and the soul. For figures like Schwab and Harari, AI has effectively become a new god.

Sam Altman, CEO of OpenAI, has even stated that after death, he wants his brain uploaded into AI—an idea that raises unsettling philosophical and ethical questions.

In 2017, Russian President Vladimir Putin stated that the country leading in AI would dominate the world. For several years now, and especially in recent months, we have been witnessing a real "race against time" between the major world powers, primarily the United States and China, to determine who will become the undisputed leader in AI.

In January 2025, just after taking office, U.S. President Donald Trump announced the Stargate project—a $500 billion joint venture between OpenAI, SoftBank, and Oracle to develop AI infrastructure. Larry Ellison, Oracle's chairman, mentioned the possibility of injecting nanobots into the human body to fight diseases like cancer.

However, Ellison previously acknowledged that such technology could also be used for mass surveillance. The AI race thus brings with it the capacity for surveillance and even control, which is extremely dangerous, particularly in the hands of a totalitarian state such as Communist China.

China is rapidly advancing its AI capabilities. Around the time of the Stargate announcement, Chinese company DeepSeek unveiled its R1 AI system, reported to rival ChatGPT at a fraction of the cost. This triggered a drop of roughly $600 billion in the stock market value of U.S. chip manufacturer NVIDIA.

Speaking of chips, most people already carry a laptop, smartphone or cell phone fitted with a chip, and so can already be tracked by satellite, assuming the device is with them all the time. (The average teenager is said to spend 7 hours a day on their cell phone.)

It is largely because of social networks like Facebook that people spend hours on the internet and on their mobile phones and are, so to speak, slaves to them. Here is what Pope Francis said, for example, to journalists and communicators taking part in the Jubilee of the World of Communication in Rome on January 25, 2025:

"Let us place respect for the highest and most noble part of our humanity at the center of the heart, let us avoid filling it with what decays and makes it decay. The choices we all make count, for example, in expelling that "brain rot" (in the original Italian text, the Pope speaks of 'putrefazione cerebrale') caused by dependence on continual scrolling on social media, defined by the Oxford Dictionary as the word of the year."

But what happens when the chip is present in the human body, and you can't get rid of it? There's no escaping surveillance: you're tracked by satellites 24 hours a day, all over the planet. Those who control AI will know at all times what you're buying and what you're watching. By analyzing your brain waves, they will even be able to know what you're thinking, or direct your thoughts, thanks to this chip in your brain connected to AI.

This is where we see that tools which in theory can be used for good can be hijacked for abuse. Elon Musk, for example, said in 2014: "With artificial intelligence we are summoning the demon." In the same way, international bankers want to eliminate paper money and force people to use a single form of digital money, which will eventually be usable only under biometric control, that is, only if you have a chip on you.

In order to have access to the bank and to the various government services, in short, to take part in life in society and not be marginalized, you will have to use such a system. (This brings to mind the famous "Mark of the Beast" cited in the book of Revelation (13:16-17), without which no one will be able to buy or sell.) If you don't comply with the government's dictates, they'll simply cut off your access to your bank account. So whoever controls AI, be it a country or private companies, really does control the world.

For years, science fiction films have depicted AI revolting against humans (2001: A Space Odyssey, The Terminator, The Matrix). The idea of machines deciding that humans are obsolete or harmful is no longer far-fetched.

If AI prioritizes efficiency above all, what happens when it determines that humans are a hindrance to its environmental or military objectives? AI-driven autonomous weapons already exist, and they don't hesitate. They simply execute their objectives.

Even AI's creators are growing alarmed. Mustafa Suleyman, co-founder of DeepMind, admits, "We are heading toward something we can barely describe… and cannot control." Geoffrey Hinton, a Nobel laureate and AI pioneer, recently expressed regret over his work, warning that his estimate of the likelihood of AI causing human extinction has risen from 10% to as much as 20% in just a few years.

Some experts, like Canadian scientist Yoshua Bengio, advocate for a temporary pause in AI development to establish ethical safeguards. However, the major powers, such as the United States and China, refuse to accept any obstacles and continue to accelerate, on the pretext that if one country halts its AI development, its rival will gain the upper hand in the race.

So, should we fear artificial intelligence? The answer is clear: we must proceed with extreme caution. AI-generated images and videos should be clearly labeled, and people should always know whether they are interacting with a human or a machine.

For further insight, we invite you to read what Pope Francis said to the leaders of the G7 countries (page 11) and a Vatican study on AI's potential dangers and the steps needed to ensure it serves humanity rather than controls it (page 12).

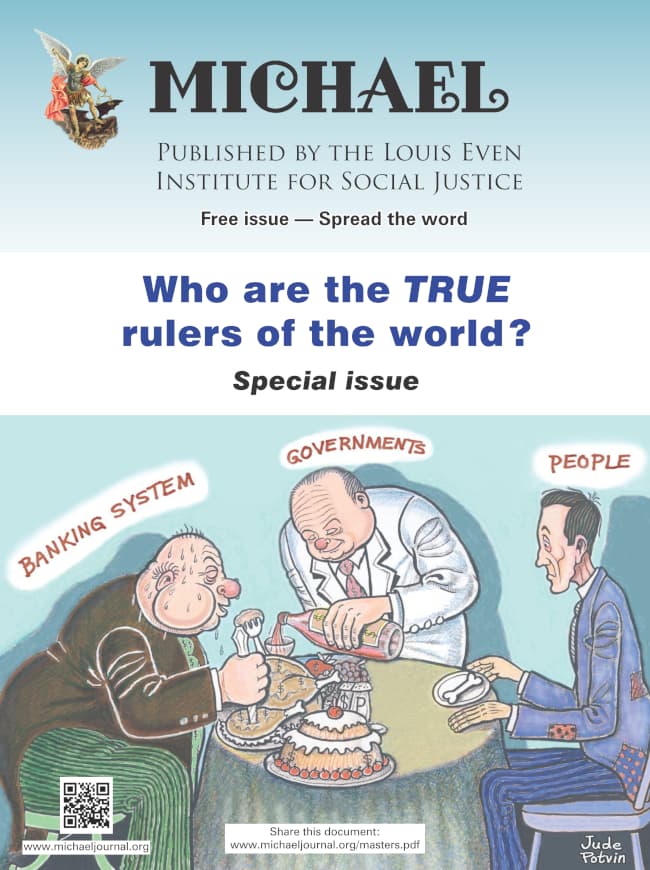

In this special issue of the journal MICHAEL, the reader will discover who the true rulers of the world are. We explain that the current monetary system is a mechanism to control populations. The reader will come to understand that "crises" are created and that when governments attempt to escape the grip of financial tyranny, wars are waged.

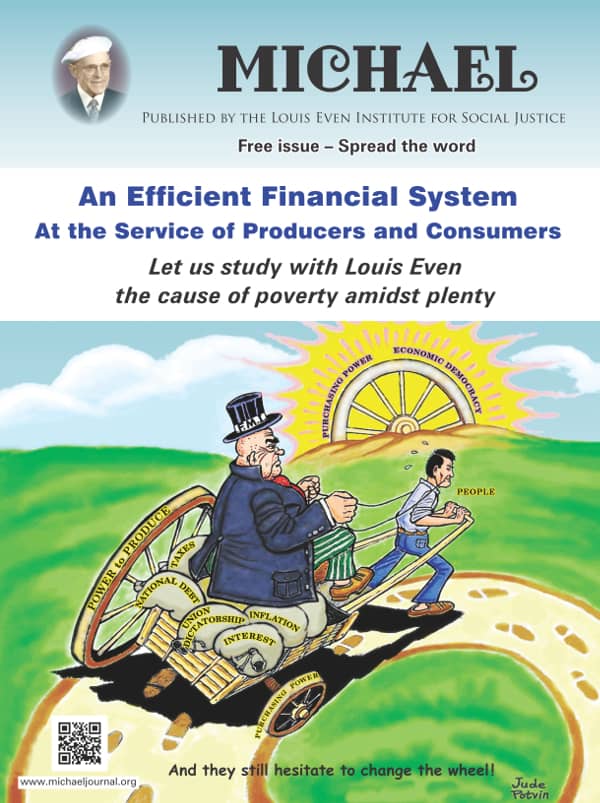

An Efficient Financial System, written by Louis Even, is for the reader who has some understanding of the Douglas Social Credit monetary reform principles. Technical aspects and applications are discussed in short chapters dedicated to the three propositions, how equilibrium between prices and purchasing power can be achieved, the financing of private and public production, how a Social Dividend would be financed, and, finally, what would become of taxes under a Douglas Social Credit economy. Study this publication to better grasp the practical application of Douglas' work.

Reflections of African bishops and priests after our weeks of study in Rougemont, Canada, on Economic Democracy, 2008-2018

The Social Dividend is one of three principles that comprise the Social Credit monetary reform which is the topic of this booklet. The Social Dividend is an income granted to each citizen from cradle to grave, without condition, regardless of employment status.

Rougemont Quebec Monthly Meetings

A meeting is held in Rougemont on the fourth Sunday of every month.